Our clients regularly ask us to review links built by other services… So I’ve seen A LOT.

And you’d be amazed (or not) at how terrible most are.

Something like 80% fail my quality check.

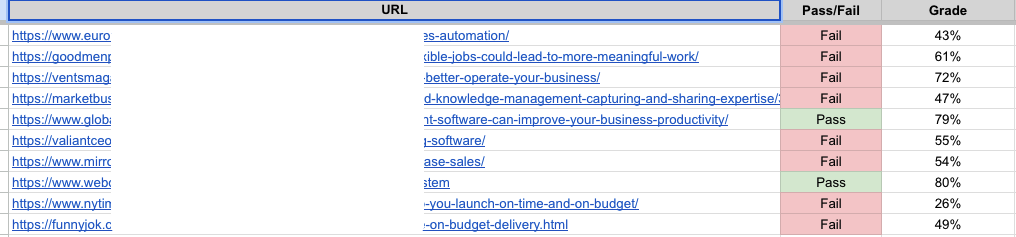

A typical report I send back looks like this:

The links come from sites with good metrics (DR, traffic) and are often pretty relevant, so none of that is the issue.

The BIG issue, imo, is that they spam low-quality outbound links like there’s no tomorrow.

Total link farms. They exist solely to sell links, and boy oh boy do they sell to just about anyone.

If you’ve done link building or used any services, you’ll know the sort I mean.

My theory is they have such a strong pattern of spam that it’s easy for Google to detect and then ignore.

So I decided to test this theory…

ChatGPT told me that any good experiment should start with a hypothesis.

Mine is: Google will detect & ignore links from “link farms”, even if they have good metrics like DR and traffic.

How I ran the test:

The basic idea was to choose a page that ranks for a certain keyword, build some high DR/traffic (yet spammy) links, and monitor the ranking over time.

I chose super low volume, low competition keywords where the rankings are typically very stable, so the impact of our links should be clear.

I chose 5 different keywords, and 1 target page for each keyword.

These SERPs mostly had 0 links for every single result on page 1, so for my test I built just 1 link to each target page.

Links built:

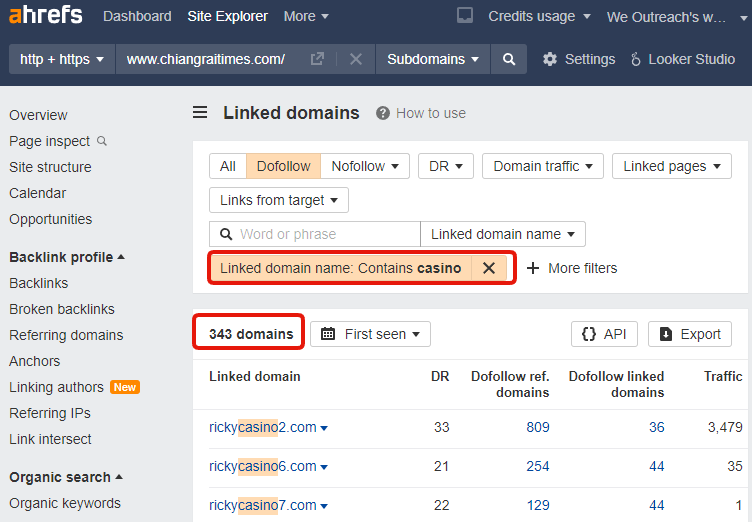

I chose sites with good DR and traffic, but which are pure link farms:

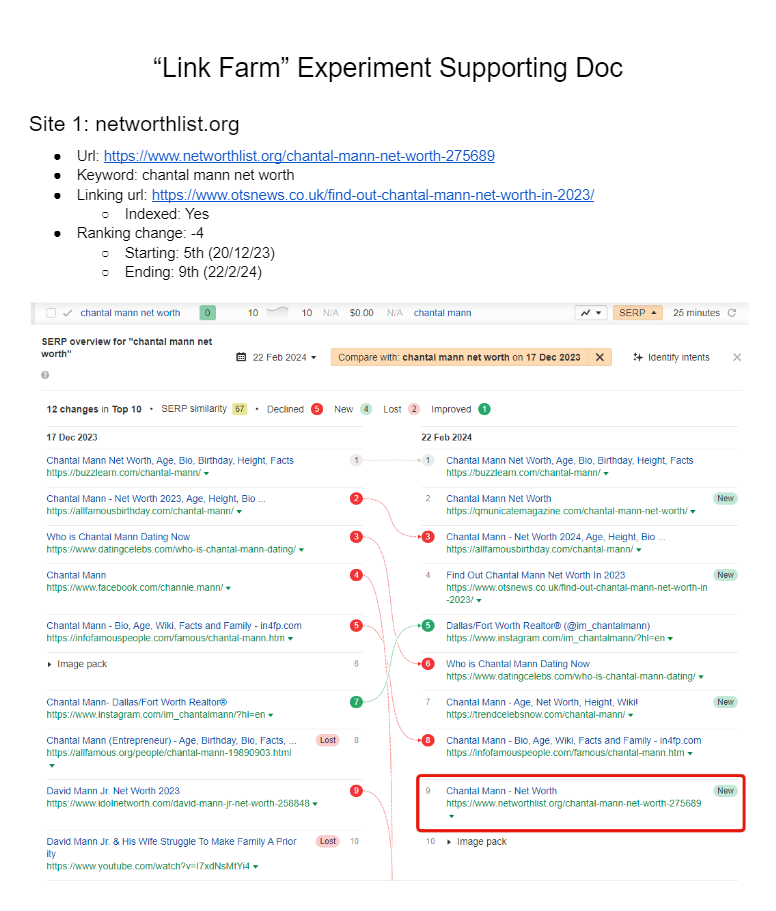

- https://www.otsnews.co.uk/ (DR50 + 10,700 traffic)

- https://marketbusinessnews.com/ (DR77 + 138,000 traffic)

- https://www.sportsgossip.com/ (DR29 + 3,900 traffic)

- https://www.chiangraitimes.com/ (DR70 + 6,700 traffic)

- https://www.truegossiper.com/ (DR30 + 1,800 traffic)

These are really popular sites used by link building services. You’ve probably seen some of them yourself.

Results:

2 months after the links were published and indexed by Google, the changes in rankings were:

- Site 1: -4 (5th -> 9th)

- Site 2: +1 (8th -> 7th)

- Site 3: +1 (8th -> 7th)

- Site 4: +1 (5th -> 4th)

- Site 5: +0 (4th -> 4th)
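
If you want to sanity-check the numbers, here is a rough Python sketch that recomputes the changes from the start and end positions listed above. The variable and function names are mine, not from any tool, and the mean/median are just a quick summary I added on top. With only 5 data points, treat them as a rough read and nothing more.

```python
from statistics import mean, median

# (start_rank, end_rank) for each target page, copied from the list above
results = {
    "Site 1": (5, 9),
    "Site 2": (8, 7),
    "Site 3": (8, 7),
    "Site 4": (5, 4),
    "Site 5": (4, 4),
}


def rank_change(start, end):
    """Positive = moved up the SERP, negative = moved down."""
    return start - end


changes = {site: rank_change(start, end) for site, (start, end) in results.items()}

for site, delta in changes.items():
    print(f"{site}: {delta:+d}")

# Quick summary across the 5 pages
print("mean change:", mean(changes.values()))
print("median change:", median(changes.values()))
```

The median comes out at +1 and the mean is dragged slightly negative by Site 1, which is why it's worth looking at what actually caused each move before reading anything into them.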

Check this Google Doc for the full details of the keywords, links, and results.

Assessment:

Although there are a few small ranking changes, if you check the supporting Google Doc you’ll see most of them didn’t come from our pages getting stronger/weaker.

In one case Google added a “people also ask” feature, which caused Ahrefs to drop the rank of every page on the SERP by 1.

In another 2 cases we accidentally outranked our target page with our guest post page (oops lol), causing a -1.
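
One way to think about these cases is to back out the positions a page lost to things that have nothing to do with its own strength. This is only a sketch of that idea: the function name is made up, and the example numbers are hypothetical, not rows from the test.

```python
def adjusted_rank(observed_rank, positions_lost_to_noise):
    """Tracked rank with unrelated displacements removed.

    positions_lost_to_noise counts places lost to things like a new SERP
    feature or our own guest post outranking the target page.
    """
    return max(1, observed_rank - positions_lost_to_noise)


# Hypothetical example: a page tracked at 8th after a SERP feature
# pushed every result down by one place.
print(adjusted_rank(8, 1))  # 7
```

It’s crude, but it makes the point that a -1 from a new SERP feature isn’t the same thing as a page getting weaker.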

What I saw here was basically no strong positive or negative impact.

Which for me is… great?

My hypothesis was correct and I am, in fact, a link building savant?

Well alright, calm down Jason!

This was a small, imperfect test… But I’m genuinely shocked to see so little impact in any direction.

I guess the next step is to repeat this test a few more times and in different ways.

What I’d do better next time:

Firstly, I’d try to avoid the whole accidentally parasite-SEOing ourselves thing.

That was dumb.

Secondly, I’d find a better way to do rank tracking. Ahrefs is great but it doesn’t update frequently for these tiny keywords and overall I think we could be more precise.

Thirdly, I might do a Kyle Roof-style lorem ipsum test to remove more variables.